Sensors

GigaPixel Camera: Emerging applications in virtual museums, cultural heritage, and digital art preservation require very high quality and high resolution imaging of objects with fine structure, shape, and texture. To this end, we propose to use large format digital photography. We analyze and resolve some of the unique challenges presented by digital large format photography, in particular sensor-lens mismatch and extended depth of field. Based on our analysis, we have designed and built a digital tile-scan large format camera capable of acquiring high quality and high resolution images of static scenes. [Project Page]

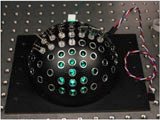

LED Only BRDF Measurement: Light Emitting Diodes (LEDs) can be used both as light emitters and as light detectors. In this paper, we present a novel BRDF measurement device consisting exclusively of LEDs. Our design can acquire BRDFs over a full hemisphere, or even a full sphere (for the bidirectional transmittance distribution function, BTDF), and can also measure a (partial) multi-spectral BRDF. Because we use no cameras, projectors, or even mirrors, our design does not suffer from occlusion problems. It is fast, significantly simpler, and more compact than existing BRDF measurement designs. (With Jiaping Wang, Bennett Wilburn, Xiaoyang Li and Le Ma). [Project Page]

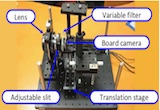

Condenser based BRDF Measurement: We develop a hand-held, high-speed BRDF capturing device for phase one measurements. A condenser-based optical setup collects a dense hemisphere of rays emanating from a single point on the target sample as the device is manually scanned over the sample, yielding 10 BRDF point measurements per second. (With Yue Dong, Jiaping Wang, Xin Tong, John Snyder, Yanxiang Lan, Baining Guo). [Project Page]

Modular Wireless Smart Camera: Most digital smart cameras are closed and relatively computationally weak systems. This results from the tradeoff between cost on the one hand and flexibility and computational power on the other. Additionally, these cameras are often powered by an FPGA or a DSP and operated by an embedded OS, if one exists at all. These aspects make commercial smart cameras poorly suited to research and prototyping, where flexibility and ease of use are of utmost importance. In contrast, PC104/PC104+ is an open and popular standard and compact form factor for embedded systems. By using this standard, we can design a modular smart camera with a wide range of computational power and peripheral components, including wireless communication. [Project Page]

Hybrid Camera: Motion blur due to camera motion can significantly degrade the quality of an image. Since the path of the camera motion can be arbitrary, deblurring of motion blurred images is a hard problem. In this project, we exploit the fundamental trade-off between spatial resolution and temporal resolution to construct a hybrid camera that can measure its own motion during image integration. The acquired motion information is used to compute a point spread function (PSF) that represents the path of the camera during integration. This PSF is then used to deblur the image. This prototype system was evaluated in different indoor and outdoor scenes using long exposures and complex camera motion paths. The results show that, with minimal resources, hybrid imaging outperforms previous approaches to the motion blur problem. (With Shree K. Nayar). [Project Page]
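
The step from measured motion to a PSF can be illustrated with a minimal sketch. It assumes the camera path is already available as image-plane offsets in pixels, sampled at uniform time intervals, so that the time spent at each offset is proportional to the number of samples there. The function name `psf_from_path` and the nearest-pixel rasterization are illustrative choices, not the method used in the project.

```python
def psf_from_path(path, size):
    """Rasterize a sampled camera path into a size x size PSF that sums to 1.

    path: list of (dx, dy) offsets in pixels, assumed uniformly sampled in time.
    size: odd window width; offset (0, 0) maps to the center cell.
    """
    psf = [[0.0] * size for _ in range(size)]
    center = size // 2
    for dx, dy in path:
        col = center + int(round(dx))
        row = center + int(round(dy))
        # Samples outside the window are ignored in this sketch.
        if 0 <= row < size and 0 <= col < size:
            psf[row][col] += 1.0
    total = sum(sum(r) for r in psf)
    if total == 0:
        raise ValueError("path falls entirely outside the PSF window")
    # Normalize so the PSF conserves image energy.
    return [[v / total for v in r] for r in psf]


# Hypothetical path: the camera drifts right and dwells at the last position.
example_psf = psf_from_path([(0, 0), (1, 0), (2, 0), (2, 0)], 5)
```

A PSF of this kind would then be passed to a deconvolution routine to deblur the image; that step is omitted here.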

High-resolution Hyperspectral Imaging via Matrix Factorization: In this work, we introduce a simple new technique for reconstructing a very high-resolution hyperspectral image from two readily obtained measurements: A lower-resolution hyperspectral image and a high-resolution RGB image. Our approach is divided into two stages: We first apply an unmixing algorithm to the hyperspectral input, to estimate a basis representing reflectance spectra. We then use this representation in conjunction with the RGB input to produce the desired result. (With Rei Kawakami, John Wright, Yu-Wing Tai, Yasuyuki Matsushita, and Katsushi Ikeuchi). [Project Page]
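
The second stage can be sketched for a single pixel under strong simplifying assumptions: the basis of reflectance spectra from the first stage is taken as given, the camera's spectral response is known, the basis has only two spectra, and plain least squares stands in for whatever estimator the project actually uses. All function names and the toy numbers are hypothetical.

```python
def project_basis(response, basis):
    """Project basis spectra through the camera response.

    response: 3 x bands matrix (one row per RGB channel).
    basis: bands x k matrix (one column per basis spectrum).
    Returns the 3 x k matrix of basis colors as seen by the RGB camera.
    """
    bands = len(basis)
    k = len(basis[0])
    return [[sum(row[b] * basis[b][j] for b in range(bands)) for j in range(k)]
            for row in response]


def solve_two_coeffs(proj, rgb):
    """Least-squares mixing coefficients for a two-spectrum basis (2x2 normal equations)."""
    a11 = sum(p[0] * p[0] for p in proj)
    a12 = sum(p[0] * p[1] for p in proj)
    a22 = sum(p[1] * p[1] for p in proj)
    b1 = sum(p[0] * c for p, c in zip(proj, rgb))
    b2 = sum(p[1] * c for p, c in zip(proj, rgb))
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("basis spectra are not distinguishable in RGB")
    return ((a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det)


def reconstruct_spectrum(basis, coeffs):
    """Combine the basis spectra with the estimated coefficients."""
    return [sum(basis[b][j] * coeffs[j] for j in range(len(coeffs)))
            for b in range(len(basis))]


# Toy data: 4 spectral bands, 2 basis spectra, an idealized RGB response.
basis = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
response = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
rgb_pixel = [0.3, 1.0, 0.7]
coeffs = solve_two_coeffs(project_basis(response, basis), rgb_pixel)
spectrum = reconstruct_spectrum(basis, coeffs)
```

The point of the sketch is that three RGB values cannot determine a full spectrum on their own, but they can determine a few mixing coefficients once the basis is fixed.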

Jitter Camera: The resolution of videos can be computationally enhanced by moving the camera and applying super-resolution algorithms. However, a moving camera introduces motion blur, which limits the quality of super-resolution. To overcome this limitation, we have developed a novel camera called the "jitter camera". The jitter camera produces shifts between consecutive video frames without introducing any motion blur. This is done by shifting the video detector instantaneously and timing the shifts to occur between pixel integration periods. The videos captured by the jitter camera are processed by an adaptive super-resolution algorithm that handles complex dynamic scenes in a robust manner producing a video that has a higher resolution than the captured one. (With Assaf Zomet and Shree K. Nayar). [Project Page]
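
To see why controlled sub-pixel shifts help, consider a deliberately simplified sketch: four frames of a static scene, offset from one another by exactly half a pixel, are interleaved onto a grid with twice the sampling density. This is not the adaptive algorithm described above. It treats each pixel as a point sample, ignores the pixel footprint, and assumes nothing in the scene moves. The function name is hypothetical.

```python
def interleave_jittered_frames(f00, f01, f10, f11):
    """Interleave four half-pixel-shifted frames into a 2x denser grid.

    f_yx is the frame captured with the detector shifted by (y/2, x/2) pixels,
    given as a list of rows. All frames must have the same dimensions.
    """
    h, w = len(f00), len(f00[0])
    out = [[0.0] * (2 * w) for _ in range(2 * h)]
    for r in range(h):
        for c in range(w):
            out[2 * r][2 * c] = f00[r][c]          # no shift
            out[2 * r][2 * c + 1] = f01[r][c]      # half pixel right
            out[2 * r + 1][2 * c] = f10[r][c]      # half pixel down
            out[2 * r + 1][2 * c + 1] = f11[r][c]  # half pixel right and down
    return out
```

Because the shifts happen between integration periods, each frame is sharp, so the interleaved samples are not smeared by motion blur.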

Segmentation with Invisible Keying Signal: Chroma keying is the process of segmenting objects from images and video using color cues. A blue (or green) screen placed behind an object during recording is used in special effects and in virtual studios. A different background later replaces the blue color. A new method for automatic keying using an invisible signal is presented. The advantages of the new approach over conventional chroma keying are an unlimited color range for foreground objects and no foreground contamination by the background color. The method can be used in real time, and no user assistance is required. A new camera design and a single-chip sensor design for keying are also presented. [Project Page]

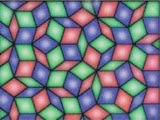

Penrose Pixels: We present a novel approach to reconstruction-based super-resolution that explicitly models the detector's pixel layout. Pixels in our model can vary in shape and size, and there may be gaps between adjacent pixels. Furthermore, their layout can be periodic as well as aperiodic, such as Penrose tiling or a biological retina. We also present a new variant of the well-known error back-projection super-resolution algorithm that makes use of the exact detector model in its back-projection operator for better accuracy. Our method can be applied equally well to either periodic or aperiodic pixel tiling. (With Zhouchen Lin and Bennett Wilburn) [Project Page]
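
The idea of using the detector layout inside the back-projection step can be sketched in one dimension. In this toy version, each low-resolution pixel is described by the list of high-resolution cells it covers, so pixels may differ in size and may leave gaps. The forward model averages the covered cells, and the same footprints are used to push the residual back. The names, the 1D setting, and the uniform weighting are assumptions made for illustration and are not taken from the project.

```python
def forward(high, layout):
    """Simulate one low-resolution image: each pixel averages the cells it covers."""
    return [sum(high[i] for i in cells) / len(cells) for cells in layout]


def back_project(lows, layouts, n, iterations=200, step=1.0):
    """Iterative error back-projection on a 1D signal of n high-resolution cells.

    lows: list of low-resolution images (lists of pixel values).
    layouts: for each image, a list of pixels, each a list of covered cell indices.
    """
    high = [0.0] * n
    for _ in range(iterations):
        correction = [0.0] * n
        counts = [0] * n
        for low, layout in zip(lows, layouts):
            simulated = forward(high, layout)
            for value, sim, cells in zip(low, simulated, layout):
                err = value - sim
                # Spread the residual over exactly the cells this pixel covers.
                for i in cells:
                    correction[i] += err
                    counts[i] += 1
        # Cells that no pixel covers (gaps) keep their current value.
        high = [h + step * c / k if k else h
                for h, c, k in zip(high, correction, counts)]
    return high


# Two hypothetical detectors with different, non-uniform pixel layouts.
layouts = [[[0, 1], [2, 3]], [[0], [1, 2], [3]]]
truth = [1.0, 2.0, 3.0, 4.0]
lows = [forward(truth, layout) for layout in layouts]
estimate = back_project(lows, layouts, n=4)
```

In this example the two layouts together determine the signal uniquely, so the estimate approaches `truth`; with fewer or less varied layouts, the iteration would only recover what the measurements constrain.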

EagleVision: Smart camera hardware and software to be used for traffic light control. Each camera can replace up to 8 physical loops. The camera uses machine vision and machine learning to be robust to various conditions including illumination change, weather change and wind. The product outperformed similar existing products. Proprietary technology, never published. (With Ramesh Visvanathan, Siemens ITS). [Product Brochure (PDF)]